NESTML dopamine-modulated STDP synapse tutorial

Some text in this notebook is copied verbatim from [1]. Network diagrams were modeled after [2].

Pavlov and Thompson (1902) first described classical conditioning: a phenomenon in which a biologically potent stimulus, the Unconditional Stimulus (UC), is initially paired with a neutral stimulus, the Conditional Stimulus (CS). After many trials, learning is observed when the previously neutral stimulus starts to elicit a response similar to the one previously elicited only by the biologically potent stimulus. Pavlov and Thompson performed many experiments with dogs, observing their response (by monitoring salivation) to the appearance of the person who had been feeding them (CS) and to the appearance of the actual food (UC). They demonstrated that the dogs started to salivate in the presence of the person who had been feeding them (or any other CS), rather than only when the food appeared, because the CS had previously been associated with food.

Image credits: https://www.psychologicalscience.org/observer/revisiting-pavlovian-conditioning

In this tutorial, we create a dopamine-modulated STDP model in NESTML and characterize it before using it in a network (reinforcement) learning task.

Model

Izhikevich (2007) revisits an important question: how does an animal know which of the many cues and actions preceding a reward should be credited for the reward? Izhikevich explains that dopamine-modulated STDP has a built-in instrumental conditioning property, i.e., the associations between cues, actions and rewards are learned automatically by reinforcing the firing patterns (networks of synapses) responsible, even when the firings of those patterns are followed by a delayed reward or masked by other network activity.

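To achieve this, each synapse has an eligibility trace \(C\):

\[ \frac{dC}{dt} = -\frac{C}{\tau_C} + \text{STDP}(\Delta t)\,\delta(t - t_\text{pre/post}) \tag{1} \]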

where \(\tau_C\) is the decay time constant of the eligibility trace and \(\text{STDP}(\Delta t)\) represents the magnitude of the change to make to the eligibility trace in response to a pair of pre- and post-synaptic spikes with temporal difference \(\Delta t = t_\text{post} - t_\text{pre}\). (This is just the usual STDP rule, see https://nestml.readthedocs.io/en/latest/tutorials/stdp_windows/stdp_windows.html.) Finally, \(\delta(t - t_\text{pre/post})\) is a Dirac delta function used to apply the effect of STDP to the trace at the times of pre- or post-synaptic spikes.

The concentration of dopamine is described by a variable \(D\):

\[ \frac{dD}{dt} = -\frac{D}{\tau_d} + D_c \sum_{t_d^f} \delta(t - t_d^f) \tag{2} \]

where \(\tau_d\) is the time constant of dopamine re-absorption, \(D_c\) is a real number indicating the increase in dopamine concentration caused by each incoming dopaminergic spike; \(t_d^f\) are the times of these spikes.

Equations (1, 2) are then combined to calculate the change in synaptic strength \(W\):

\[ \frac{dW}{dt} = C D \tag{3} \]

When a post-synaptic spike arrives very shortly after a pre-synaptic spike, a standard STDP rule would immediately potentiate the synaptic strength. However, when using the three-factor STDP rule, this potentiation would instead be applied to the eligibility trace.

Because changes to the synaptic strength are gated by dopamine concentration \(D\) (Equation 3), changes are only made to the synaptic strength if \(D \neq 0\). Furthermore, if the eligibility trace has decayed back to 0 before any dopaminergic spikes arrive, the synaptic strength will also not be changed.
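The gating behaviour described above can be illustrated with a small numerical sketch. It is not part of the NESTML model: it integrates the eligibility trace \(C\), the dopamine concentration \(D\) and the weight change \(dW/dt = C D\) with a plain forward-Euler scheme, for a single spike pairing and at most one dopaminergic spike. The function name, the step size and the unit increments are assumptions chosen for illustration; the time constants match the defaults used later in this tutorial.

```python
def simulate_three_factor(pair_time, dopa_time, t_stop=3000.0, dt=0.1,
                          tau_c=1000.0, tau_d=200.0, stdp_amount=1.0, d_c=1.0):
    """Forward-Euler sketch of the three-factor rule (all times in ms).

    pair_time: time at which a pre-before-post pairing increments the
               eligibility trace C by `stdp_amount` (illustrative value).
    dopa_time: time of a single dopaminergic spike that increments D by
               `d_c`, or None if no dopamine arrives.
    Returns the accumulated weight change, i.e. the integral of C * D.
    """
    c = 0.0         # eligibility trace C
    d = 0.0         # dopamine concentration D
    w_change = 0.0  # accumulated change of the synaptic strength W
    pair_step = round(pair_time / dt)
    dopa_step = None if dopa_time is None else round(dopa_time / dt)
    for step in range(round(t_stop / dt)):
        if step == pair_step:
            c += stdp_amount
        if step == dopa_step:
            d += d_c
        w_change += c * d * dt   # dW/dt = C * D
        c -= c / tau_c * dt      # dC/dt = -C / tau_C between spikes
        d -= d / tau_d * dt      # dD/dt = -D / tau_d between spikes
    return w_change


# Same pairing at t = 100 ms, three dopamine scenarios.
no_dopamine = simulate_three_factor(100.0, None)
early_reward = simulate_three_factor(100.0, 300.0)
late_reward = simulate_three_factor(100.0, 2500.0)
```

Without dopamine the weight does not change at all, and a reward that arrives late, after the eligibility trace has largely decayed, produces a smaller change than an early one.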

[1]:

%matplotlib inline

from typing import List, Optional

import matplotlib as mpl

mpl.rcParams['axes.formatter.useoffset'] = False
mpl.rcParams['axes.grid'] = True
mpl.rcParams['grid.color'] = 'k'
mpl.rcParams['grid.linestyle'] = ':'
mpl.rcParams['grid.linewidth'] = 0.5
mpl.rcParams['figure.dpi'] = 120
mpl.rcParams['figure.figsize'] = [8., 3.]

import matplotlib.pyplot as plt
import nest
import numpy as np
import os
import random
import re

from pynestml.codegeneration.nest_code_generator_utils import NESTCodeGeneratorUtils

-- N E S T --

Copyright (C) 2004 The NEST Initiative

Version: 3.6.0-post0.dev0

Built: Mar 26 2024 08:52:51

This program is provided AS IS and comes with

NO WARRANTY. See the file LICENSE for details.

Problems or suggestions?

Visit https://www.nest-simulator.org

Type 'nest.help()' to find out more about NEST.

Generating code with NESTML

To generate fast code, NESTML needs to process the synapse model together with the neuron model that will be its postsynaptic partner in the network instantiation (see https://nestml.readthedocs.io/en/latest/nestml_language/synapses_in_nestml.html#generating-code).

In this tutorial, we will use a very simple integrate-and-fire model, where arriving spikes cause an instantaneous increment of the membrane potential, the “iaf_psc_delta” model.
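To make the "instantaneous increment" concrete, here is a minimal pure-Python sketch of such a neuron. It is a simplified stand-in, not the generated NEST code: the refractory period and external currents are left out, the weight is treated directly as a jump in mV, and the function name is hypothetical. The default parameter values (tau_m = 10 ms, E_L = -70 mV, V_th = -55 mV, V_reset = -70 mV) are the ones reported for the neuron model in the code generation log further below.

```python
import math


def simulate_iaf_delta(spike_times, weight, t_stop=50.0, dt=0.1,
                       tau_m=10.0, E_L=-70.0, V_th=-55.0, V_reset=-70.0):
    """Leaky integrate-and-fire neuron with delta-shaped synaptic input.

    spike_times: arrival times of presynaptic spikes (ms).
    weight:      jump of the membrane potential per spike (mV, assumption).
    Returns the membrane potential trace and the output spike times.
    """
    decay = math.exp(-dt / tau_m)  # exact propagator for the leak over one step
    spike_steps = {round(t / dt) for t in spike_times}
    V_m = E_L
    trace, out_spikes = [], []
    for step in range(round(t_stop / dt)):
        V_m = E_L + (V_m - E_L) * decay   # passive decay towards E_L
        if step in spike_steps:
            V_m += weight                 # instantaneous increment
        if V_m >= V_th:                   # threshold crossing: spike and reset
            out_spikes.append(step * dt)
            V_m = V_reset
        trace.append(V_m)
    return trace, out_spikes


# A 5 mV input stays below threshold; a 20 mV input triggers a spike.
trace_weak, spikes_weak = simulate_iaf_delta([10.0], weight=5.0)
trace_strong, spikes_strong = simulate_iaf_delta([10.0], weight=20.0)
```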

We use a helper function, NESTCodeGeneratorUtils.generate_code_for(), to generate the C++ code for the models, build it as a NEST extension module, and load the module into the kernel. Because of the co-generation, the resulting model names are composed of the associated neuron and synapse partners, for example, “stdp_synapse__with_iaf_psc_delta” and “iaf_psc_delta__with_stdp_synapse”.

Because NEST does not support un- or reloading of modules at the time of writing, we implement a workaround that appends a unique number to the name of each generated model, for example, “stdp_synapse_3cc945f__with_iaf_psc_delta_3cc945f” and “iaf_psc_delta_3cc945f__with_stdp_synapse_3cc945f”.

The resulting neuron and synapse model names are returned by the function, so we do not have to think about these internals.
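The naming scheme can be mimicked in a few lines of Python. This is only an illustration of how the names in the examples above are put together; the function `co_generated_names` is hypothetical and is not how NESTML implements the workaround internally.

```python
import uuid


def co_generated_names(neuron, synapse, suffix=None):
    """Compose model names following the pattern shown in the examples above.

    Hypothetical helper for illustration only. If no suffix is given, a short
    random hexadecimal string is used so that repeated builds within one
    session do not clash.
    """
    if suffix is None:
        suffix = uuid.uuid4().hex[:7]
    neuron_tagged = f"{neuron}_{suffix}"
    synapse_tagged = f"{synapse}_{suffix}"
    neuron_model_name = f"{neuron_tagged}__with_{synapse_tagged}"
    synapse_model_name = f"{synapse_tagged}__with_{neuron_tagged}"
    return neuron_model_name, synapse_model_name


neuron_name, synapse_name = co_generated_names("iaf_psc_delta", "stdp_synapse",
                                               suffix="3cc945f")
```

With the suffix “3cc945f” this reproduces the two example names given above.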

Formulating the model in NESTML

We now go on to define the full synapse model in NESTML:

[2]:

nestml_stdp_dopa_model = """
model neuromodulated_stdp_synapse:
    state:
        w real = 1.
        n real = 0.   # Neuromodulator concentration
        c real = 0.   # Eligibility trace
        pre_tr real = 0.
        post_tr real = 0.

    parameters:
        d ms = 1 ms @nest::delay
        tau_tr_pre ms = 20 ms   # STDP time constant for weight changes caused by pre-before-post spike pairings.
        tau_tr_post ms = 20 ms  # STDP time constant for weight changes caused by post-before-pre spike pairings.
        tau_c ms = 1000 ms      # Time constant of eligibility trace
        tau_n ms = 200 ms       # Time constant of dopaminergic trace
        b real = 0.             # Dopaminergic baseline concentration
        Wmax real = 200.        # Maximal synaptic weight
        Wmin real = 0.          # Minimal synaptic weight
        A_plus real = 1.        # Multiplier applied to weight changes caused by pre-before-post spike pairings. If b (dopamine baseline concentration) is zero, then A_plus is simply the multiplier for facilitation (as in the stdp_synapse model). If b is not zero, then A_plus will be the multiplier for facilitation only if n - b is positive, where n is the instantaneous dopamine concentration in the volume transmitter. If n - b is negative, A_plus will be the multiplier for depression.
        A_minus real = 1.5      # Multiplier applied to weight changes caused by post-before-pre spike pairings. If b (dopamine baseline concentration) is zero, then A_minus is simply the multiplier for depression (as in the stdp_synapse model). If b is not zero, then A_minus will be the multiplier for depression only if n - b is positive, where n is the instantaneous dopamine concentration in the volume transmitter. If n - b is negative, A_minus will be the multiplier for facilitation.
        A_vt real = 1.          # Multiplier applied to dopa spikes

    equations:
        pre_tr' = -pre_tr / tau_tr_pre
        post_tr' = -post_tr / tau_tr_post

    internals:
        tau_s 1/ms = (tau_c + tau_n) / (tau_c * tau_n)

    input:
        pre_spikes <- spike
        post_spikes <- spike
        mod_spikes <- spike

    output:
        spike

    onReceive(mod_spikes):
        n += A_vt / tau_n

    onReceive(post_spikes):
        post_tr += 1.

        # facilitation
        c += A_plus * pre_tr

    onReceive(pre_spikes):
        pre_tr += 1.

        # depression
        c -= A_minus * post_tr

        # deliver spike to postsynaptic partner
        emit_spike(w, d)

    # update from time t to t + resolution()
    update:
        integrate_odes()

        # resolution() returns the timestep to be made (in units of time)
        # the sequence here matters: the update step for w requires the "old" values of c and n
        w -= c * ( n / tau_s * expm1( -tau_s * resolution() ) \
                 - b * tau_c * expm1( -resolution() / tau_c ))
        w = max(0., w)
        c = c * exp(-resolution() / tau_c)
        n = n * exp(-resolution() / tau_n)
"""

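The weight update in the update block is the exact solution of dw/dt = c (n - b) over one time step, given that c and n both decay exponentially during that step (here n plays the role of the dopamine concentration \(D\) and c that of the eligibility trace \(C\)). As a sanity check, the sketch below compares this closed-form step with a fine-grained forward-Euler integration in plain Python. The function names, the test values and the number of substeps are assumptions made for this illustration.

```python
import math


def weight_step_closed_form(w, c, n, h, tau_c=1000.0, tau_n=200.0, b=0.0):
    """One step of length h (ms), mirroring the expression in the update block."""
    tau_s = (tau_c + tau_n) / (tau_c * tau_n)  # combined decay rate of c * n
    w -= c * (n / tau_s * math.expm1(-tau_s * h)
              - b * tau_c * math.expm1(-h / tau_c))
    return max(0.0, w)


def weight_step_euler(w, c, n, h, tau_c=1000.0, tau_n=200.0, b=0.0,
                      substeps=10_000):
    """Reference: integrate dw/dt = c * (n - b) with many small Euler steps."""
    dt = h / substeps
    for _ in range(substeps):
        w += c * (n - b) * dt
        c -= c / tau_c * dt   # eligibility trace decays
        n -= n / tau_n * dt   # dopamine concentration decays
    return max(0.0, w)


w_exact = weight_step_closed_form(1.0, c=0.5, n=0.02, h=1.0, b=0.005)
w_euler = weight_step_euler(1.0, c=0.5, n=0.02, h=1.0, b=0.005)
difference = abs(w_exact - w_euler)
```

Using expm1 rather than exp(...) - 1 avoids loss of precision when the time step is small compared to the time constants.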
Generate the code, build the user module and make the model available to instantiate in NEST:

[3]:

# generate and build code
module_name, neuron_model_name, synapse_model_name = NESTCodeGeneratorUtils.generate_code_for(
    "../../../models/neurons/iaf_psc_delta_neuron.nestml",
    nestml_stdp_dopa_model,
    post_ports=["post_spikes"],
    mod_ports=["mod_spikes"],
    logging_level="INFO")

[1,GLOBAL, INFO]: List of files that will be processed:

[2,GLOBAL, INFO]: /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/iaf_psc_delta_neuron.nestml

[3,GLOBAL, INFO]: /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/neuromodulated_stdp_synapse.nestml

[4,GLOBAL, INFO]: Target platform code will be generated in directory: '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target'

[5,GLOBAL, INFO]: Target platform code will be installed in directory: '/tmp/nestml_target_2nbwam92'

[6,GLOBAL, INFO]: The NEST Simulator version was automatically detected as: master

[7,GLOBAL, INFO]: Given template root path is not an absolute path. Creating the absolute path with default templates directory '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/pynestml/codegeneration/resources_nest/point_neuron'

[8,GLOBAL, INFO]: Given template root path is not an absolute path. Creating the absolute path with default templates directory '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/pynestml/codegeneration/resources_nest/point_neuron'

[9,GLOBAL, INFO]: Given template root path is not an absolute path. Creating the absolute path with default templates directory '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/pynestml/codegeneration/resources_nest/point_neuron'

[10,GLOBAL, INFO]: The NEST Simulator installation path was automatically detected as: /home/charl/julich/nest-simulator-install

[11,GLOBAL, INFO]: Start processing '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/iaf_psc_delta_neuron.nestml'!

[13,iaf_psc_delta_neuron_nestml, INFO, [51:79;51:79]]: Implicit magnitude conversion from pA to pA buffer with factor 1.0

[14,iaf_psc_delta_neuron_nestml, INFO, [51:15;51:74]]: Implicit magnitude conversion from mV / ms to pA / pF with factor 1.0

[15,GLOBAL, INFO]: Start processing '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/neuromodulated_stdp_synapse.nestml'!

[17,neuromodulated_stdp_synapse_nestml, WARNING, [12:8;12:28]]: Variable 'd' has the same name as a physical unit!

[18,neuromodulated_stdp_synapse_nestml, INFO, [40:13;40:20]]: Implicit casting from (compatible) type '1 / ms' to 'real'.

[19,neuromodulated_stdp_synapse_nestml, INFO, [63:13;63:123]]: Implicit casting from (compatible) type 'ms' to 'real'.

[22,neuromodulated_stdp_synapse_nestml, WARNING, [12:8;12:28]]: Variable 'd' has the same name as a physical unit!

[23,neuromodulated_stdp_synapse_nestml, INFO, [40:13;40:20]]: Implicit casting from (compatible) type '1 / ms' to 'real'.

[24,neuromodulated_stdp_synapse_nestml, INFO, [63:13;63:123]]: Implicit casting from (compatible) type 'ms' to 'real'.

[26,iaf_psc_delta_neuron_nestml, INFO, [51:79;51:79]]: Implicit magnitude conversion from pA to pA buffer with factor 1.0

[27,iaf_psc_delta_neuron_nestml, INFO, [51:15;51:74]]: Implicit magnitude conversion from mV / ms to pA / pF with factor 1.0

[29,neuromodulated_stdp_synapse_nestml, WARNING, [12:8;12:28]]: Variable 'd' has the same name as a physical unit!

[30,neuromodulated_stdp_synapse_nestml, INFO, [40:13;40:20]]: Implicit casting from (compatible) type '1 / ms' to 'real'.

[31,neuromodulated_stdp_synapse_nestml, INFO, [63:13;63:123]]: Implicit casting from (compatible) type 'ms' to 'real'.

[32,GLOBAL, INFO]: State variables that will be moved from synapse to neuron: ['post_tr']

[33,GLOBAL, INFO]: Parameters that will be copied from synapse to neuron: ['tau_tr_post']

[34,GLOBAL, INFO]: Moving state var defining equation(s) post_tr

[35,GLOBAL, INFO]: Moving state variables for equation(s) post_tr

[36,GLOBAL, INFO]: Moving definition of post_tr from synapse to neuron

[37,GLOBAL, INFO]: Moving statement post_tr += 1.0

[38,GLOBAL, INFO]: In synapse: replacing ``continuous`` type input ports that are connected to postsynaptic neuron with suffixed external variable references

[39,GLOBAL, INFO]: Copying parameters from synapse to neuron...

[40,GLOBAL, INFO]: Copying definition of tau_tr_post from synapse to neuron

[41,GLOBAL, INFO]: Adding suffix to variables in spike updates

[42,GLOBAL, INFO]: In synapse: replacing variables with suffixed external variable references

[43,GLOBAL, INFO]: • Replacing variable post_tr

[44,GLOBAL, INFO]: ASTSimpleExpression replacement made (var = post_tr__for_neuromodulated_stdp_synapse_nestml) in expression: A_minus * post_tr

[47,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, WARNING, [12:8;12:28]]: Variable 'd' has the same name as a physical unit!

[48,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [40:13;40:20]]: Implicit casting from (compatible) type '1 / ms' to 'real'.

[49,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [63:13;63:123]]: Implicit casting from (compatible) type 'ms' to 'real'.

INFO:Analysing input:

INFO:{

"dynamics": [

{

"expression": "V_m' = (-(V_m - E_L)) / tau_m + 0 * (1.0 / 1.0) + (I_e + I_stim) / C_m",

"initial_values": {

"V_m": "E_L"

}

}

],

"options": {

"output_timestep_symbol": "__h"

},

"parameters": {

"C_m": "250",

"E_L": "(-70)",

"I_e": "0",

"V_min": "(-oo) * 1",

"V_reset": "(-70)",

"V_th": "(-55)",

"refr_T": "2",

"tau_m": "10",

"tau_syn": "2"

}

}

INFO:Processing global options...

INFO:Processing input shapes...

INFO:

Processing differential-equation form shape V_m with defining expression = "(-(V_m - E_L)) / tau_m + 0 * (1.0 / 1.0) + (I_e + I_stim) / C_m"

INFO: Returning shape: Shape "V_m" of order 1

INFO:Shape V_m: reconstituting expression E_L/tau_m - V_m/tau_m + I_e/C_m + I_stim/C_m

INFO:All known variables: [V_m], all parameters used in ODEs: {tau_m, I_e, C_m, I_stim, E_L}

INFO:No numerical value specified for parameter "I_stim"

INFO:

Processing differential-equation form shape V_m with defining expression = "(-(V_m - E_L)) / tau_m + 0 * (1.0 / 1.0) + (I_e + I_stim) / C_m"

INFO: Returning shape: Shape "V_m" of order 1

INFO:Shape V_m: reconstituting expression E_L/tau_m - V_m/tau_m + I_e/C_m + I_stim/C_m

INFO:Finding analytically solvable equations...

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph.dot

[50,GLOBAL, INFO]: Successfully constructed neuron-synapse pair iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml, neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml

[51,GLOBAL, INFO]: Analysing/transforming model 'iaf_psc_delta_neuron_nestml'

[52,iaf_psc_delta_neuron_nestml, INFO, [43:0;94:0]]: Starts processing of the model 'iaf_psc_delta_neuron_nestml'

INFO:Shape V_m: reconstituting expression E_L/tau_m - V_m/tau_m + I_e/C_m + I_stim/C_m

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph_analytically_solvable_before_propagated.dot

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph_analytically_solvable.dot

INFO:Generating propagators for the following symbols: V_m

INFO:update_expr[V_m] = -E_L*__P__V_m__V_m + E_L + V_m*__P__V_m__V_m - I_e*__P__V_m__V_m*tau_m/C_m + I_e*tau_m/C_m - I_stim*__P__V_m__V_m*tau_m/C_m + I_stim*tau_m/C_m

WARNING:Not preserving expression for variable "V_m" as it is solved by propagator solver

INFO:In ode-toolbox: returning outdict =

INFO:[

{

"initial_values": {

"V_m": "E_L"

},

"parameters": {

"C_m": "250.000000000000",

"E_L": "-70.0000000000000",

"I_e": "0",

"tau_m": "10.0000000000000"

},

"propagators": {

"__P__V_m__V_m": "exp(-__h/tau_m)"

},

"solver": "analytical",

"state_variables": [

"V_m"

],

"update_expressions": {

"V_m": "-E_L*__P__V_m__V_m + E_L + V_m*__P__V_m__V_m - I_e*__P__V_m__V_m*tau_m/C_m + I_e*tau_m/C_m - I_stim*__P__V_m__V_m*tau_m/C_m + I_stim*tau_m/C_m"

}

}

]

INFO:Analysing input:

INFO:{

"dynamics": [

{

"expression": "V_m' = (-(V_m - E_L)) / tau_m + 0 * (1.0 / 1.0) + (I_e + I_stim) / C_m",

"initial_values": {

"V_m": "E_L"

}

},

{

"expression": "post_tr__for_neuromodulated_stdp_synapse_nestml' = (-post_tr__for_neuromodulated_stdp_synapse_nestml) / tau_tr_post__for_neuromodulated_stdp_synapse_nestml",

"initial_values": {

"post_tr__for_neuromodulated_stdp_synapse_nestml": "0.0"

}

}

],

"options": {

"output_timestep_symbol": "__h"

},

"parameters": {

"C_m": "250",

"E_L": "(-70)",

"I_e": "0",

"V_min": "(-oo) * 1",

"V_reset": "(-70)",

"V_th": "(-55)",

"refr_T": "2",

"tau_m": "10",

"tau_syn": "2",

"tau_tr_post__for_neuromodulated_stdp_synapse_nestml": "20"

}

}

INFO:Processing global options...

INFO:Processing input shapes...

INFO:

Processing differential-equation form shape V_m with defining expression = "(-(V_m - E_L)) / tau_m + 0 * (1.0 / 1.0) + (I_e + I_stim) / C_m"

INFO: Returning shape: Shape "V_m" of order 1

INFO:Shape V_m: reconstituting expression E_L/tau_m - V_m/tau_m + I_e/C_m + I_stim/C_m

INFO:

Processing differential-equation form shape post_tr__for_neuromodulated_stdp_synapse_nestml with defining expression = "(-post_tr__for_neuromodulated_stdp_synapse_nestml) / tau_tr_post__for_neuromodulated_stdp_synapse_nestml"

INFO: Returning shape: Shape "post_tr__for_neuromodulated_stdp_synapse_nestml" of order 1

INFO:Shape post_tr__for_neuromodulated_stdp_synapse_nestml: reconstituting expression -post_tr__for_neuromodulated_stdp_synapse_nestml/tau_tr_post__for_neuromodulated_stdp_synapse_nestml

INFO:All known variables: [V_m, post_tr__for_neuromodulated_stdp_synapse_nestml], all parameters used in ODEs: {tau_m, I_e, C_m, I_stim, E_L, tau_tr_post__for_neuromodulated_stdp_synapse_nestml}

INFO:No numerical value specified for parameter "I_stim"

INFO:

Processing differential-equation form shape V_m with defining expression = "(-(V_m - E_L)) / tau_m + 0 * (1.0 / 1.0) + (I_e + I_stim) / C_m"

INFO: Returning shape: Shape "V_m" of order 1

INFO:

Processing differential-equation form shape post_tr__for_neuromodulated_stdp_synapse_nestml with defining expression = "(-post_tr__for_neuromodulated_stdp_synapse_nestml) / tau_tr_post__for_neuromodulated_stdp_synapse_nestml"

INFO: Returning shape: Shape "post_tr__for_neuromodulated_stdp_synapse_nestml" of order 1

INFO:Shape V_m: reconstituting expression E_L/tau_m - V_m/tau_m + I_e/C_m + I_stim/C_m

INFO:Shape post_tr__for_neuromodulated_stdp_synapse_nestml: reconstituting expression -post_tr__for_neuromodulated_stdp_synapse_nestml/tau_tr_post__for_neuromodulated_stdp_synapse_nestml

INFO:Finding analytically solvable equations...

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph.dot

[54,GLOBAL, INFO]: Analysing/transforming model 'iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml'

[55,iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml, INFO, [43:0;94:0]]: Starts processing of the model 'iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml'

INFO:Shape V_m: reconstituting expression E_L/tau_m - V_m/tau_m + I_e/C_m + I_stim/C_m

INFO:Shape post_tr__for_neuromodulated_stdp_synapse_nestml: reconstituting expression -post_tr__for_neuromodulated_stdp_synapse_nestml/tau_tr_post__for_neuromodulated_stdp_synapse_nestml

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph_analytically_solvable_before_propagated.dot

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph_analytically_solvable.dot

INFO:Generating propagators for the following symbols: V_m, post_tr__for_neuromodulated_stdp_synapse_nestml

INFO:update_expr[V_m] = -E_L*__P__V_m__V_m + E_L + V_m*__P__V_m__V_m - I_e*__P__V_m__V_m*tau_m/C_m + I_e*tau_m/C_m - I_stim*__P__V_m__V_m*tau_m/C_m + I_stim*tau_m/C_m

INFO:update_expr[post_tr__for_neuromodulated_stdp_synapse_nestml] = __P__post_tr__for_neuromodulated_stdp_synapse_nestml__post_tr__for_neuromodulated_stdp_synapse_nestml*post_tr__for_neuromodulated_stdp_synapse_nestml

WARNING:Not preserving expression for variable "V_m" as it is solved by propagator solver

WARNING:Not preserving expression for variable "post_tr__for_neuromodulated_stdp_synapse_nestml" as it is solved by propagator solver

INFO:In ode-toolbox: returning outdict =

INFO:[

{

"initial_values": {

"V_m": "E_L",

"post_tr__for_neuromodulated_stdp_synapse_nestml": "0.0"

},

"parameters": {

"C_m": "250.000000000000",

"E_L": "-70.0000000000000",

"I_e": "0",

"tau_m": "10.0000000000000",

"tau_tr_post__for_neuromodulated_stdp_synapse_nestml": "20.0000000000000"

},

"propagators": {

"__P__V_m__V_m": "exp(-__h/tau_m)",

"__P__post_tr__for_neuromodulated_stdp_synapse_nestml__post_tr__for_neuromodulated_stdp_synapse_nestml": "exp(-__h/tau_tr_post__for_neuromodulated_stdp_synapse_nestml)"

},

"solver": "analytical",

"state_variables": [

"V_m",

"post_tr__for_neuromodulated_stdp_synapse_nestml"

],

"update_expressions": {

"V_m": "-E_L*__P__V_m__V_m + E_L + V_m*__P__V_m__V_m - I_e*__P__V_m__V_m*tau_m/C_m + I_e*tau_m/C_m - I_stim*__P__V_m__V_m*tau_m/C_m + I_stim*tau_m/C_m",

"post_tr__for_neuromodulated_stdp_synapse_nestml": "__P__post_tr__for_neuromodulated_stdp_synapse_nestml__post_tr__for_neuromodulated_stdp_synapse_nestml*post_tr__for_neuromodulated_stdp_synapse_nestml"

}

}

]

INFO:Analysing input:

INFO:{

"dynamics": [

{

"expression": "pre_tr' = (-pre_tr) / tau_tr_pre",

"initial_values": {

"pre_tr": "0.0"

}

}

],

"options": {

"output_timestep_symbol": "__h"

},

"parameters": {

"A_minus": "1.5",

"A_plus": "1.0",

"A_vt": "1.0",

"Wmax": "200.0",

"Wmin": "0.0",

"b": "0.0",

"d": "1",

"tau_c": "1000",

"tau_n": "200",

"tau_tr_post": "20",

"tau_tr_pre": "20"

}

}

INFO:Processing global options...

INFO:Processing input shapes...

INFO:

Processing differential-equation form shape pre_tr with defining expression = "(-pre_tr) / tau_tr_pre"

INFO: Returning shape: Shape "pre_tr" of order 1

INFO:Shape pre_tr: reconstituting expression -pre_tr/tau_tr_pre

INFO:All known variables: [pre_tr], all parameters used in ODEs: {tau_tr_pre}

INFO:

Processing differential-equation form shape pre_tr with defining expression = "(-pre_tr) / tau_tr_pre"

INFO: Returning shape: Shape "pre_tr" of order 1

INFO:Shape pre_tr: reconstituting expression -pre_tr/tau_tr_pre

INFO:Finding analytically solvable equations...

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph.dot

INFO:Shape pre_tr: reconstituting expression -pre_tr/tau_tr_pre

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph_analytically_solvable_before_propagated.dot

[57,GLOBAL, INFO]: Analysing/transforming synapse neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.

[58,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [2:0;66:0]]: Starts processing of the model 'neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml'

INFO:Saving dependency graph plot to /tmp/ode_dependency_graph_analytically_solvable.dot

INFO:Generating propagators for the following symbols: pre_tr

INFO:update_expr[pre_tr] = __P__pre_tr__pre_tr*pre_tr

WARNING:Not preserving expression for variable "pre_tr" as it is solved by propagator solver

INFO:In ode-toolbox: returning outdict =

INFO:[

{

"initial_values": {

"pre_tr": "0.0"

},

"parameters": {

"tau_tr_pre": "20.0000000000000"

},

"propagators": {

"__P__pre_tr__pre_tr": "exp(-__h/tau_tr_pre)"

},

"solver": "analytical",

"state_variables": [

"pre_tr"

],

"update_expressions": {

"pre_tr": "__P__pre_tr__pre_tr*pre_tr"

}

}

]

[60,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, WARNING, [12:8;12:28]]: Variable 'd' has the same name as a physical unit!

[61,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [40:13;40:20]]: Implicit casting from (compatible) type '1 / ms' to 'real'.

[62,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [63:13;63:123]]: Implicit casting from (compatible) type 'ms' to 'real'.

[64,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, WARNING, [12:8;12:28]]: Variable 'd' has the same name as a physical unit!

[65,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [40:13;40:20]]: Implicit casting from (compatible) type '1 / ms' to 'real'.

[66,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [63:13;63:123]]: Implicit casting from (compatible) type 'ms' to 'real'.

[67,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.cpp

[68,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.h

[69,iaf_psc_delta_neuron_nestml, INFO, [43:0;94:0]]: Successfully generated code for the model: 'iaf_psc_delta_neuron_nestml' in: '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target' !

[70,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.cpp

[71,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.h

[72,iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml, INFO, [43:0;94:0]]: Successfully generated code for the model: 'iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml' in: '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target' !

Generating code for the synapse neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.

[73,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h

[74,neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml, INFO, [2:0;66:0]]: Successfully generated code for the model: 'neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml' in: '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target' !

[75,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module.cpp

[76,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module.h

[77,GLOBAL, INFO]: Rendering template /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/CMakeLists.txt

[78,GLOBAL, INFO]: Successfully generated NEST module code in '/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target' !

CMake Warning (dev) at CMakeLists.txt:95 (project):

cmake_minimum_required() should be called prior to this top-level project()

call. Please see the cmake-commands(7) manual for usage documentation of

both commands.

This warning is for project developers. Use -Wno-dev to suppress it.

-- The CXX compiler identification is GNU 12.3.0

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-------------------------------------------------------

nestml_9557cf48dd76469a8f5dc4ea86b34c42_module Configuration Summary

-------------------------------------------------------

C++ compiler : /usr/bin/c++

Build static libs : OFF

C++ compiler flags :

NEST compiler flags : -std=c++17 -Wall -fopenmp -O2 -fdiagnostics-color=auto

NEST include dirs : -I/home/charl/julich/nest-simulator-install/include/nest -I/usr/include -I/usr/include -I/usr/include

NEST libraries flags : -L/home/charl/julich/nest-simulator-install/lib/nest -lnest -lsli /usr/lib/x86_64-linux-gnu/libltdl.so /usr/lib/x86_64-linux-gnu/libgsl.so /usr/lib/x86_64-linux-gnu/libgslcblas.so /usr/lib/gcc/x86_64-linux-gnu/12/libgomp.so /usr/lib/x86_64-linux-gnu/libpthread.a

-------------------------------------------------------

You can now build and install 'nestml_9557cf48dd76469a8f5dc4ea86b34c42_module' using

make

make install

The library file libnestml_9557cf48dd76469a8f5dc4ea86b34c42_module.so will be installed to

/tmp/nestml_target_2nbwam92

The module can be loaded into NEST using

(nestml_9557cf48dd76469a8f5dc4ea86b34c42_module) Install (in SLI)

nest.Install(nestml_9557cf48dd76469a8f5dc4ea86b34c42_module) (in PyNEST)

CMake Warning (dev) in CMakeLists.txt:

No cmake_minimum_required command is present. A line of code such as

cmake_minimum_required(VERSION 3.26)

should be added at the top of the file. The version specified may be lower

if you wish to support older CMake versions for this project. For more

information run "cmake --help-policy CMP0000".

This warning is for project developers. Use -Wno-dev to suppress it.

-- Configuring done (0.5s)

-- Generating done (0.0s)

-- Build files have been written to: /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target

[ 25%] Building CXX object CMakeFiles/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module_module.dir/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module.o

[ 50%] Building CXX object CMakeFiles/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module_module.dir/iaf_psc_delta_neuron_nestml.o

[ 75%] Building CXX object CMakeFiles/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module_module.dir/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.o

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.cpp: In member function ‘void iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml::init_state_internal_()’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.cpp:183:16: warning: unused variable ‘__resolution’ [-Wunused-variable]

183 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.cpp: In member function ‘virtual void iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml::update(const nest::Time&, long int, long int)’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.cpp:287:24: warning: comparison of integer expressions of different signedness: ‘long int’ and ‘const size_t’ {aka ‘const long unsigned int’} [-Wsign-compare]

287 | for (long i = 0; i < NUM_SPIKE_RECEPTORS; ++i)

| ~~^~~~~~~~~~~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml.cpp:282:10: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

282 | auto get_t = [origin, lag](){ return nest::Time( nest::Time::step( origin.get_steps() + lag + 1) ).get_ms(); };

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.cpp: In member function ‘void iaf_psc_delta_neuron_nestml::init_state_internal_()’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.cpp:173:16: warning: unused variable ‘__resolution’ [-Wunused-variable]

173 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.cpp: In member function ‘virtual void iaf_psc_delta_neuron_nestml::update(const nest::Time&, long int, long int)’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.cpp:266:24: warning: comparison of integer expressions of different signedness: ‘long int’ and ‘const size_t’ {aka ‘const long unsigned int’} [-Wsign-compare]

266 | for (long i = 0; i < NUM_SPIKE_RECEPTORS; ++i)

| ~~^~~~~~~~~~~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/iaf_psc_delta_neuron_nestml.cpp:261:10: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

261 | auto get_t = [origin, lag](){ return nest::Time( nest::Time::step( origin.get_steps() + lag + 1) ).get_ms(); };

| ^~~~~

In file included from /home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module.cpp:36:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml() [with targetidentifierT = nest::TargetIdentifierPtrRport]’:

/home/charl/julich/nest-simulator-install/include/nest/connector_model.h:164:25: required from ‘nest::GenericConnectorModel<ConnectionT>::GenericConnectorModel(std::string) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierPtrRport>; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/model_manager_impl.h:62:5: required from ‘void nest::ModelManager::register_connection_model(const std::string&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/nest_impl.h:37:70: required from ‘void nest::register_connection_model(const std::string&) [with ConnectorModelT = neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:671:106: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:862:16: warning: unused variable ‘__resolution’ [-Wunused-variable]

862 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::recompute_internal_variables() [with targetidentifierT = nest::TargetIdentifierPtrRport]’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:876:3: required from ‘nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml() [with targetidentifierT = nest::TargetIdentifierPtrRport]’

/home/charl/julich/nest-simulator-install/include/nest/connector_model.h:164:25: required from ‘nest::GenericConnectorModel<ConnectionT>::GenericConnectorModel(std::string) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierPtrRport>; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/model_manager_impl.h:62:5: required from ‘void nest::ModelManager::register_connection_model(const std::string&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/nest_impl.h:37:70: required from ‘void nest::register_connection_model(const std::string&) [with ConnectorModelT = neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:671:106: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:849:16: warning: unused variable ‘__resolution’ [-Wunused-variable]

849 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml() [with targetidentifierT = nest::TargetIdentifierIndex]’:

/home/charl/julich/nest-simulator-install/include/nest/connector_model.h:164:25: required from ‘nest::GenericConnectorModel<ConnectionT>::GenericConnectorModel(std::string) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/model_manager_impl.h:103:34: required from ‘void nest::ModelManager::register_specific_connection_model_(const std::string&) [with CompleteConnecionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/model_manager_impl.h:67:80: required from ‘void nest::ModelManager::register_connection_model(const std::string&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/nest_impl.h:37:70: required from ‘void nest::register_connection_model(const std::string&) [with ConnectorModelT = neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:671:106: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:862:16: warning: unused variable ‘__resolution’ [-Wunused-variable]

862 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::recompute_internal_variables() [with targetidentifierT = nest::TargetIdentifierIndex]’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:876:3: required from ‘nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml() [with targetidentifierT = nest::TargetIdentifierIndex]’

/home/charl/julich/nest-simulator-install/include/nest/connector_model.h:164:25: required from ‘nest::GenericConnectorModel<ConnectionT>::GenericConnectorModel(std::string) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/model_manager_impl.h:103:34: required from ‘void nest::ModelManager::register_specific_connection_model_(const std::string&) [with CompleteConnecionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/model_manager_impl.h:67:80: required from ‘void nest::ModelManager::register_connection_model(const std::string&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nest-simulator-install/include/nest/nest_impl.h:37:70: required from ‘void nest::register_connection_model(const std::string&) [with ConnectorModelT = neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml; std::string = std::__cxx11::basic_string<char>]’

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:671:106: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:849:16: warning: unused variable ‘__resolution’ [-Wunused-variable]

849 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘bool nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::send(nest::Event&, size_t, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierPtrRport; size_t = long unsigned int]’:

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:391:22: required from ‘void nest::Connector<ConnectionT>::send_to_all(size_t, const std::vector<nest::ConnectorModel*>&, nest::Event&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierPtrRport>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:383:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:589:14: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

589 | auto get_t = [_tr_t](){ return _tr_t; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:614:14: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

614 | auto get_t = [__t_spike](){ return __t_spike; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:649:14: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

649 | auto get_t = [__t_spike](){ return __t_spike; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:517:18: warning: unused variable ‘__resolution’ [-Wunused-variable]

517 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:519:10: warning: variable ‘get_thread’ set but not used [-Wunused-but-set-variable]

519 | auto get_thread = [tid]()

| ^~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::trigger_update_weight(size_t, const std::vector<nest::spikecounter>&, double, const CommonPropertiesType&) [with targetidentifierT = nest::TargetIdentifierPtrRport; size_t = long unsigned int; CommonPropertiesType = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties]’:

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:446:38: required from ‘void nest::Connector<ConnectionT>::trigger_update_weight(long int, size_t, const std::vector<nest::spikecounter>&, double, const std::vector<nest::ConnectorModel*>&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierPtrRport>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:433:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:1009:18: warning: unused variable ‘_tr_t’ [-Wunused-variable]

1009 | const double _tr_t = start->t_;

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:991:10: warning: unused variable ‘timestep’ [-Wunused-variable]

991 | double timestep = 0;

| ^~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘bool nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::send(nest::Event&, size_t, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierIndex; size_t = long unsigned int]’:

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:391:22: required from ‘void nest::Connector<ConnectionT>::send_to_all(size_t, const std::vector<nest::ConnectorModel*>&, nest::Event&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:383:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:589:14: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

589 | auto get_t = [_tr_t](){ return _tr_t; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:614:14: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

614 | auto get_t = [__t_spike](){ return __t_spike; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:649:14: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

649 | auto get_t = [__t_spike](){ return __t_spike; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:517:18: warning: unused variable ‘__resolution’ [-Wunused-variable]

517 | const double __resolution = nest::Time::get_resolution().get_ms(); // do not remove, this is necessary for the resolution() function

| ^~~~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:519:10: warning: variable ‘get_thread’ set but not used [-Wunused-but-set-variable]

519 | auto get_thread = [tid]()

| ^~~~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::trigger_update_weight(size_t, const std::vector<nest::spikecounter>&, double, const CommonPropertiesType&) [with targetidentifierT = nest::TargetIdentifierIndex; size_t = long unsigned int; CommonPropertiesType = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties]’:

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:446:38: required from ‘void nest::Connector<ConnectionT>::trigger_update_weight(long int, size_t, const std::vector<nest::spikecounter>&, double, const std::vector<nest::ConnectorModel*>&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:433:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:1009:18: warning: unused variable ‘_tr_t’ [-Wunused-variable]

1009 | const double _tr_t = start->t_;

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:991:10: warning: unused variable ‘timestep’ [-Wunused-variable]

991 | double timestep = 0;

| ^~~~~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::process_mod_spikes_spikes_(const std::vector<nest::spikecounter>&, double, double, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierPtrRport]’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:563:9: required from ‘bool nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::send(nest::Event&, size_t, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierPtrRport; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:391:22: required from ‘void nest::Connector<ConnectionT>::send_to_all(size_t, const std::vector<nest::ConnectorModel*>&, nest::Event&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierPtrRport>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:383:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:699:12: warning: unused variable ‘cd’ [-Wunused-variable]

699 | double cd;

| ^~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::update_internal_state_(double, double, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierPtrRport]’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:584:9: required from ‘bool nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::send(nest::Event&, size_t, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierPtrRport; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:391:22: required from ‘void nest::Connector<ConnectionT>::send_to_all(size_t, const std::vector<nest::ConnectorModel*>&, nest::Event&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierPtrRport>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:383:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:935:10: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

935 | auto get_t = [t_start](){ return t_start; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::process_mod_spikes_spikes_(const std::vector<nest::spikecounter>&, double, double, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierIndex]’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:563:9: required from ‘bool nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::send(nest::Event&, size_t, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierIndex; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:391:22: required from ‘void nest::Connector<ConnectionT>::send_to_all(size_t, const std::vector<nest::ConnectorModel*>&, nest::Event&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:383:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:699:12: warning: unused variable ‘cd’ [-Wunused-variable]

699 | double cd;

| ^~

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h: In instantiation of ‘void nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::update_internal_state_(double, double, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierIndex]’:

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:584:9: required from ‘bool nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<targetidentifierT>::send(nest::Event&, size_t, const nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestmlCommonSynapseProperties&) [with targetidentifierT = nest::TargetIdentifierIndex; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:391:22: required from ‘void nest::Connector<ConnectionT>::send_to_all(size_t, const std::vector<nest::ConnectorModel*>&, nest::Event&) [with ConnectionT = nest::neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml<nest::TargetIdentifierIndex>; size_t = long unsigned int]’

/home/charl/julich/nest-simulator-install/include/nest/connector_base.h:383:3: required from here

/home/charl/julich/nestml-fork-integrate_specific_odes/nestml/doc/tutorials/stdp_dopa_synapse/target/neuromodulated_stdp_synapse_nestml__with_iaf_psc_delta_neuron_nestml.h:935:10: warning: variable ‘get_t’ set but not used [-Wunused-but-set-variable]

935 | auto get_t = [t_start](){ return t_start; }; // do not remove, this is in case the predefined time variable ``t`` is used in the NESTML model

| ^~~~~

[100%] Linking CXX shared module nestml_9557cf48dd76469a8f5dc4ea86b34c42_module.so

[100%] Built target nestml_9557cf48dd76469a8f5dc4ea86b34c42_module_module

[100%] Built target nestml_9557cf48dd76469a8f5dc4ea86b34c42_module_module

Install the project...

-- Install configuration: ""

-- Installing: /tmp/nestml_target_2nbwam92/nestml_9557cf48dd76469a8f5dc4ea86b34c42_module.so

Running the simulation in NEST

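Let’s define a function that instantiates a simple network with one presynaptic cell and one postsynaptic cell connected by a single synapse, plus a volume transmitter that relays the dopamine spikes. The function then runs a simulation and returns the synaptic weight at the end of the run.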

[4]:

def run_network(pre_spike_time, post_spike_time, vt_spike_times,

neuron_model_name,

synapse_model_name,

resolution=.1, # [ms]

delay=1., # [ms]

lmbda=1E-6,

sim_time=None, # if None, computed from pre and post spike times

synapse_parameters=None, # optional dictionary passed to the synapse

fname_snip="",

debug=False):

#nest.set_verbosity("M_WARNING")

nest.set_verbosity("M_ALL")

nest.ResetKernel()

nest.Install(module_name)

nest.SetKernelStatus({'resolution': resolution})

# create spike_generators with these times

pre_sg = nest.Create("spike_generator",

params={"spike_times": [pre_spike_time]})

post_sg = nest.Create("spike_generator",

params={"spike_times": [post_spike_time]})

vt_sg = nest.Create("spike_generator",

params={"spike_times": vt_spike_times})

# create volume transmitter

vt = nest.Create("volume_transmitter")

vt_parrot = nest.Create("parrot_neuron")

nest.Connect(vt_sg, vt_parrot)

nest.Connect(vt_parrot, vt, syn_spec={"synapse_model": "static_synapse",

"weight": 1.,

"delay": 1.}) # delay is ignored!

# set up custom synapse models

wr = nest.Create('weight_recorder')

nest.CopyModel(synapse_model_name, "stdp_nestml_rec",

{"weight_recorder": wr[0],

"w": 1.,

"delay": delay,

"receptor_type": 0,

"volume_transmitter": vt,

"tau_tr_pre": 10.,

})

# create parrot neurons and connect spike_generators

pre_neuron = nest.Create("parrot_neuron")

post_neuron = nest.Create(neuron_model_name)

spikedet_pre = nest.Create("spike_recorder")

spikedet_post = nest.Create("spike_recorder")

spikedet_vt = nest.Create("spike_recorder")

#mm = nest.Create("multimeter", params={"record_from" : ["V_m"]})

nest.Connect(pre_sg, pre_neuron, "one_to_one", syn_spec={"delay": 1.})

nest.Connect(post_sg, post_neuron, "one_to_one", syn_spec={"delay": 1., "weight": 9999.})

nest.Connect(pre_neuron, post_neuron, "all_to_all", syn_spec={'synapse_model': 'stdp_nestml_rec'})

#nest.Connect(mm, post_neuron)

nest.Connect(pre_neuron, spikedet_pre)

nest.Connect(post_neuron, spikedet_post)

nest.Connect(vt_parrot, spikedet_vt)

# get STDP synapse and weight before protocol

syn = nest.GetConnections(source=pre_neuron, synapse_model="stdp_nestml_rec")

if synapse_parameters is None:

synapse_parameters = {}

nest.SetStatus(syn, synapse_parameters)

initial_weight = nest.GetStatus(syn)[0]["w"]

np.testing.assert_allclose(initial_weight, 1)

nest.Simulate(sim_time)

updated_weight = nest.GetStatus(syn)[0]["w"]

actual_t_pre_sp = nest.GetStatus(spikedet_pre)[0]["events"]["times"][0]

actual_t_post_sp = nest.GetStatus(spikedet_post)[0]["events"]["times"][0]

pre_spike_times_ = nest.GetStatus(spikedet_pre, "events")[0]["times"]

assert len(pre_spike_times_) == 1 and pre_spike_times_[0] > 0

post_spike_times_ = nest.GetStatus(spikedet_post, "events")[0]["times"]

assert len(post_spike_times_) == 1 and post_spike_times_[0] > 0

vt_spike_times_ = nest.GetStatus(spikedet_vt, "events")[0]["times"]

assert len(vt_spike_times_) == 1 and vt_spike_times_[0] > 0

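#dt = actual_t_post_sp - actual_t_pre_sp

# dt is not used in this experiment (the caller sweeps the dopamine spike time instead), so return a placeholder

dt = 0.

# note: despite its name, dw holds the final weight, not the weight change

dw = updated_weight

return dt, dw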

[5]:

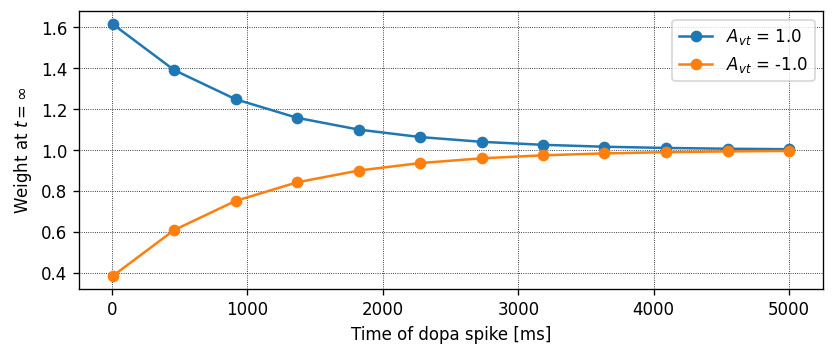

def run_vt_spike_timing_experiment(neuron_model_name, synapse_model_name, synapse_parameters=None):
    # Make sure to simulate for much longer than the eligibility trace time
    # constant, which is typically the slowest time constant in the system,
    # PLUS the time of the latest vt spike.
    sim_time = 10000.  # [ms]
    pre_spike_time = 1.  # [ms]
    post_spike_time = 3.  # [ms]
    delay = .5  # dendritic delay [ms]

    dt_vec = []
    dw_vec = []
    for vt_spike_time in np.round(np.linspace(4, 5000, 12)).astype(float):  # sim_time - 10 * delay
        dt, dw = run_network(pre_spike_time, post_spike_time, [vt_spike_time],
                             neuron_model_name,
                             synapse_model_name,
                             delay=delay,  # [ms]
                             synapse_parameters=synapse_parameters,
                             sim_time=sim_time)
        dt_vec.append(vt_spike_time)  # x-axis value: time of the dopamine (vt) spike
        dw_vec.append(dw)  # final weight returned by run_network

    return dt_vec, dw_vec, delay
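The comment on `sim_time` asks for a simulation that runs well past the latest dopamine spike. The following minimal sketch lists the dopamine spike times produced by the sweep and checks how much time is left after the last one. The value `tau_c_assumed` is an illustrative assumption, not read from the NESTML model; substitute the time constant that the synapse model actually defines.

```python
import numpy as np

# Values copied from run_vt_spike_timing_experiment.
sim_time = 10000.  # [ms]
vt_spike_times = np.round(np.linspace(4, 5000, 12)).astype(float)

# ASSUMPTION: illustrative eligibility-trace time constant [ms].
# The real value is whatever the NESTML synapse model defines.
tau_c_assumed = 1000.

# Time remaining after the latest dopamine spike, in units of tau_c_assumed.
margin = (sim_time - vt_spike_times.max()) / tau_c_assumed

print(vt_spike_times)
print("time left after last dopamine spike: %.1f time constants" % margin)
```

If the margin comes out as only one or two time constants, the weight may not yet have settled when it is read out, and `sim_time` should be increased.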

[6]:

fig, ax = plt.subplots()
for A_vt in [1., -1.]:
    dt_vec, dw_vec, delay = run_vt_spike_timing_experiment(neuron_model_name,
                                                           synapse_model_name,
                                                           synapse_parameters={"A_vt": A_vt})
    ax.plot(dt_vec, dw_vec, marker='o', label="$A_{vt}$ = " + str(A_vt))

ax.set_xlabel("Time of dopamine spike [ms]")
ax.set_ylabel(r"Weight at $t = \infty$")  # raw string so that \infty is not treated as an escape sequence
ax.legend()
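As a rough guide to what this sweep should show, the sketch below computes an idealized final weight under a deliberately simplified assumption: the weight change is proportional to `A_vt` times the eligibility trace still left when the dopamine spike arrives, and the trace decays exponentially from the postsynaptic spike time. The function `expected_final_weight` and the values of `tau_c` and `gain` are hypothetical and chosen only for illustration; the sketch ignores the dopamine concentration dynamics, the dendritic delay and any weight bounds in the actual NESTML model.

```python
import numpy as np

def expected_final_weight(vt_spike_time, A_vt, w0=1., post_spike_time=3.,
                          tau_c=1000., gain=0.1):
    """Idealized final weight (hypothetical helper, not part of the tutorial).

    ASSUMPTIONS: tau_c [ms] and gain are illustrative values; the weight
    change is taken to be A_vt * gain * (fraction of eligibility trace left
    at the time of the dopamine spike).
    """
    elapsed = np.asarray(vt_spike_time, dtype=float) - post_spike_time
    trace_left = np.exp(-elapsed / tau_c)
    return w0 + A_vt * gain * trace_left

# Same dopamine spike times as in the sweep.
vt_spike_times = np.round(np.linspace(4, 5000, 12)).astype(float)
w_plus = expected_final_weight(vt_spike_times, A_vt=1.)
w_minus = expected_final_weight(vt_spike_times, A_vt=-1.)

print(w_plus)
print(w_minus)
```

Under these assumptions the two curves mirror each other around the initial weight of 1 and both approach it as the dopamine spike arrives later, because less of the eligibility trace is left to convert into a weight change.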

Apr 19 11:33:12 Install [Info]:

loaded module nestml_9557cf48dd76469a8f5dc4ea86b34c42_module

Apr 19 11:33:12 iaf_psc_delta_neuron_nestml [Warning]:

Simulation resolution has changed. Internal state and parameters of the

model have been reset!

Apr 19 11:33:12 iaf_psc_delta_neuron_nestml__with_neuromodulated_stdp_synapse_nestml [Warning]:

Simulation resolution has changed. Internal state and parameters of the

model have been reset!

Apr 19 11:33:12 SimulationManager::set_status [Info]:

Temporal resolution changed from 0.1 to 0.1 ms.

Apr 19 11:33:12 NodeManager::prepare_nodes [Info]:

Preparing 11 nodes for simulation.

Apr 19 11:33:12 SimulationManager::start_updating_ [Info]:

Number of local nodes: 11

Simulation time (ms): 10000

Number of OpenMP threads: 1

Not using MPI

Apr 19 11:33:12 SimulationManager::run [Info]:

Simulation finished.

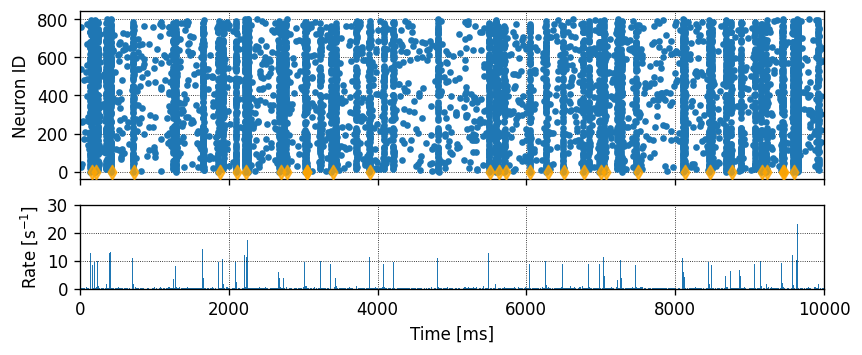

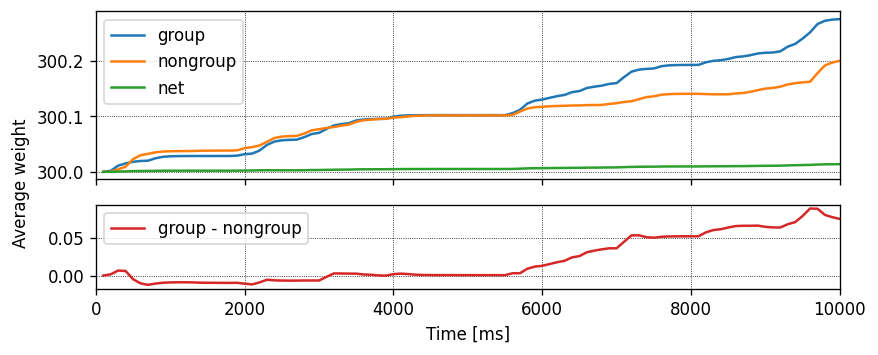

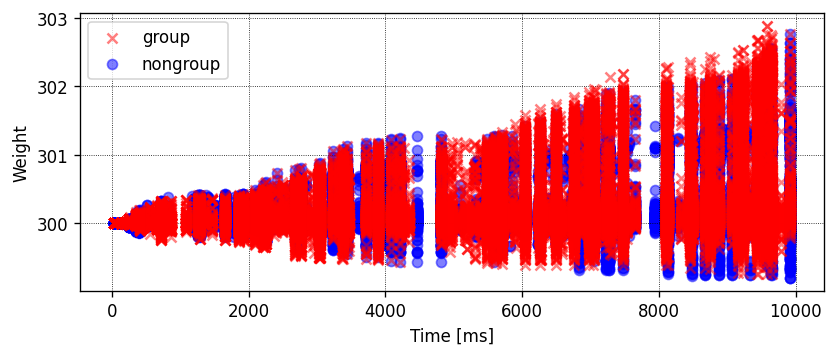
